5 Real-World Software Nightmares That Code Integrity Verification Could Have Prevented

Learn from the past to prepare for the future with these 5 infamous cyber attacks (and their disastrous consequences) that could have been prevented with code integrity checks.

Operational downtime, reputational damage, and financial losses were the three main consequences of 86% of the successful cyberattacks that Palo Alto Networks’ Unit 42 team analyzed in 2024. The trend is not slowing down: in Q1 2025, the number of cyber attacks per organization increased by 47% year-over-year.

Code integrity verification (e.g., hashing as part of the digital signature process in code signing) is one way you can minimize the risk of such attacks. This approach empowers your customers and employees to verify that the software they’ve just downloaded is exactly what your developers created and signed, and that it hasn’t been modified or infected with malware since it was cryptographically signed.

In this article, I will be your ghost of the past. I will take you back in time to explore five real-world cybersecurity incidents and share how code integrity verification could have mitigated their impact or, perhaps, entirely prevented them. So, join me as I turn back the clock.

5 Real-World Examples That Code Integrity Checks Could Have Prevented

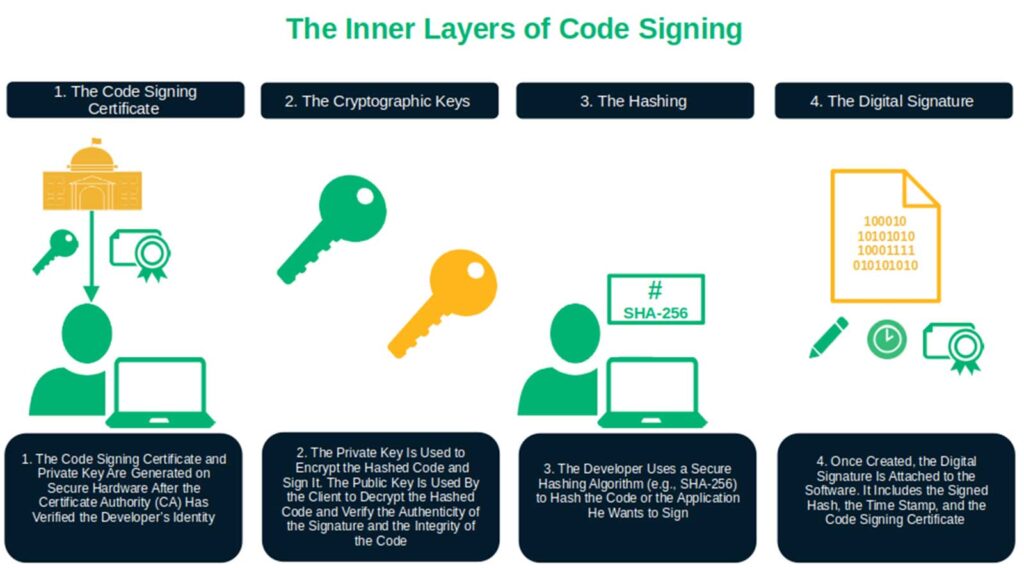

Code integrity verification is like the bouncer at the entrance of a private club. It ensures that your users and customers run only trusted and unaltered software on their machines. This is done by leveraging the power of code signing and its inner layers:

- Code signing certificates: Digital certificates used to sign software, applications, executables, and scripts. They confirm to users that the code they’re downloading or installing is authentic and hasn’t been modified without authorization.

- Cryptographic keys: The private and public keys are mathematically related strings of characters that are paired with a robust cryptographic algorithm. They’re at the heart of code integrity verification. In code signing, the developer uses the private key associated with the code signing certificate to sign the code hash (i.e., digest). When the user downloads or installs the software, their client uses the corresponding public key to check the signed digest and verify the authenticity of the signature.

- Hashing: It’s the first step of the code signing process. The developer runs a cryptographic hash function over the code to transform it into a fixed-length string of characters (i.e., a hash value or digest). This hash value doesn’t change unless someone modifies the code, even a tiny bit. The developer signs the hash value with their private key before sending the software to the recipient. If the hash value computed on the recipient’s end matches the signed one, it confirms to users that the signed software has not been tampered with.

- Digital signatures: These signatures are embedded within the software. They include the signed hash value, a time stamp (optional), and the digital certificate used to sign the code. The user’s operating system leverages this information to execute the code integrity verification process. This operation confirms your identity as a developer or publisher and that the software hasn’t been altered since it was signed.
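
To make the hashing step more concrete, here is a minimal sketch in Python that uses the standard library's `hashlib` with SHA-256. The function name `digest_of` and the two sample byte strings are made up for illustration. Real code signing tools also sign the digest with a private key, which this sketch leaves out.

```python
import hashlib


def digest_of(code: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(code).hexdigest()


# Hypothetical stand-ins for a released file and a tampered copy of it.
original = b"print('hello, world')\n"
tampered = b"print('hello, w0rld')\n"

original_digest = digest_of(original)
tampered_digest = digest_of(tampered)

# The digest has a fixed length no matter how large the input is
# (64 hex characters for SHA-256).
print(len(original_digest))

# Changing a single character produces a different digest.
print(original_digest == tampered_digest)
```

The two digests differ even though only one character changed, which is the property the verification step relies on.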

What happens when the door to your private software club is left unattended? Nothing good, I assure you. The same concept applies to software security.

So, how can you prevent the same types of issues identified by Palo Alto from happening to you or your organization? Let’s find out by taking a journey to visit five cyber attacks of the past and learn from them.

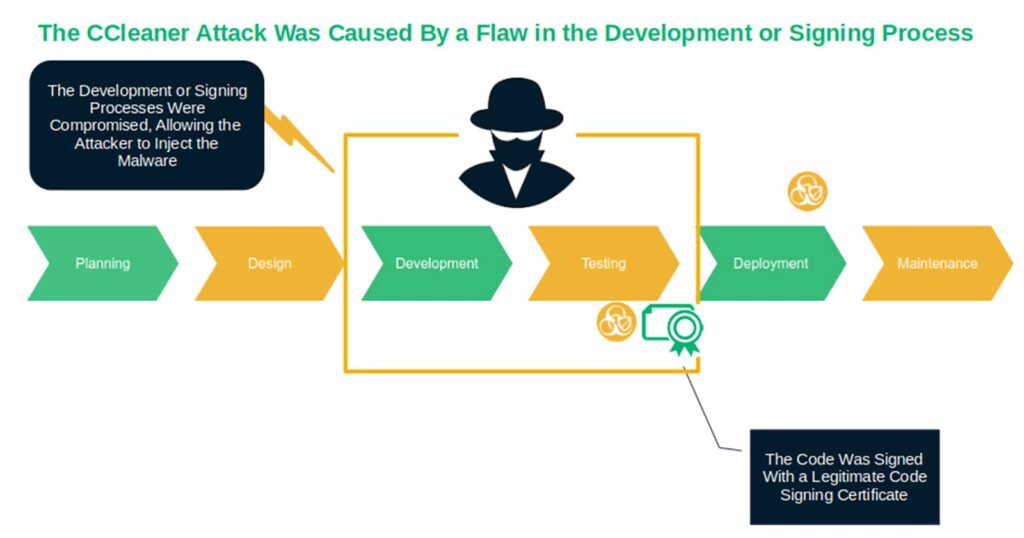

1. The CCleaner Attack That Infected 2.3 Million Devices (2017)

The bad guys injected a malicious payload into a legitimate version of CCleaner (v.5.33), a popular system optimization tool. The malware infected more than 2.3 million computers, including those of major technology companies.

Talos’ analysis of the attack shows that the software publisher digitally signed the executable with a valid code signing certificate. However, Talos noticed that the signature’s timestamp was applied approximately 15 minutes after the first signature, indicating that the development process might have been compromised.

Nevertheless, if the users had checked the digital signature and timestamp, they might have spotted the timing difference and avoided installing the malicious software.

More importantly, Avast, the software publisher that acquired Piriform (CCleaner’s original author) in July 2017, should have checked the software for malware before publishing it to their website. Security checks applied throughout the whole software development process could have caught the issue and helped mitigate further damage more quickly.

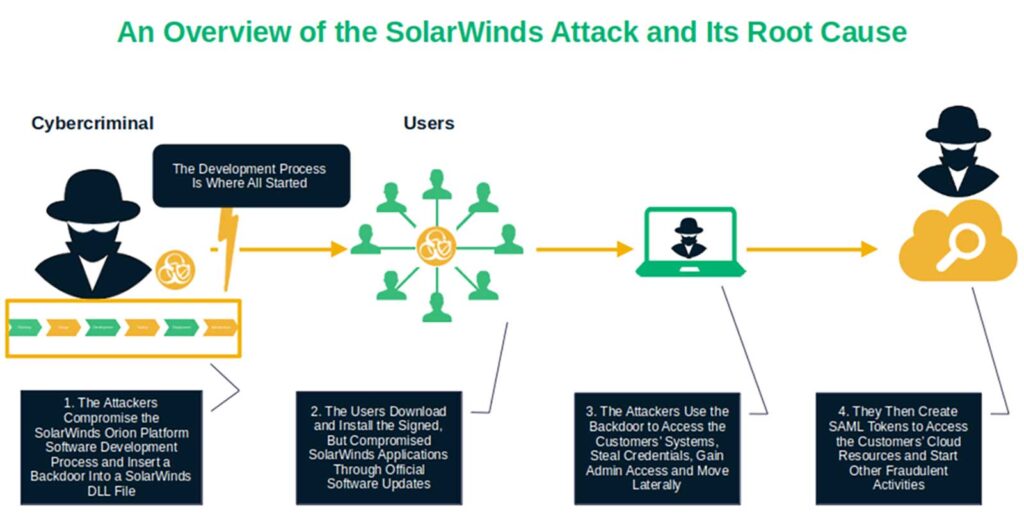

2. The SolarWinds “SUNBURST” Attack (2020)

Three years later, cybercriminals infiltrated SolarWinds’ network and accessed its build server. Once inside, they identified and exploited several vulnerabilities in the company’s software build and review processes. This enabled them to insert malicious code into the Orion platform’s update packages.

The nefarious individuals slipped their poison into the software before the software signing process had even started. But what makes matters worse is that they were able to do so without anyone noticing, using the SUNBURST backdoor to deploy TEARDROP and RAINDROP malware loaders.

Simply put, the malware spread like fire. Within a matter of days, the attackers sent out updates that touched thousands of SolarWinds’ customers — everything from government agencies to various private organizations (including several other cybersecurity companies).

So, once again, SolarWinds developers did sign the software. However, they were let down by the build and review processes and policies in their company’s software development life cycle (SDLC). (The company didn’t mandate code signing throughout the SDLC or in its CI/CD practices. Unfortunately for SolarWinds’ customers, the company only used code signing at the end of the development process.)

That corroborates the fact that, while code signing is an excellent tool to protect software from tampering, it can’t win the battle for a secure software supply chain alone. Fear not, though. We’ve got a solution for that, too. Keep on reading.

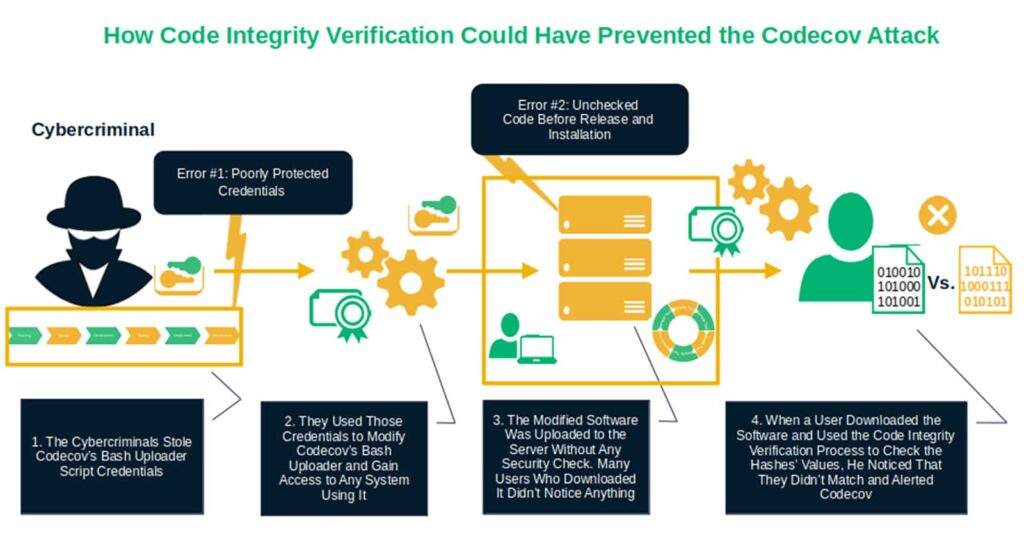

3. Codecov: The Needle in a Code ‘Haystack’ That Went Undetected for Months (2021)

Less than one year passed, and a group of very skilled black hats launched a sophisticated attack similar to SolarWinds. This time, they used their skills to extract credentials from a Codecov Docker image creation process. They then quietly modified the company’s Bash Uploader script. As this language-agnostic reporting tool works with most operating systems (e.g., Windows, Linux, and macOS) and popular developer platforms such as GitHub, the impact was enormous.

They did such a jolly good job that they gained code execution access to any system that was using the Codecov code testing script. Thankfully, nothing lasts forever. This is true even for a well-orchestrated scam involving compromised code that was previously signed using a code signing certificate.

It was actually a code signing digital signature that helped put an end to this catastrophic attack. One day, a customer manually checked the code integrity verification values of the Bash Uploader available on GitHub. When they realized the hash values didn’t match those listed on the site, the customer alerted the company. The game was over thanks to a discerning user and code integrity verification.
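
The kind of manual check that customer performed can also be automated. The sketch below, in Python, compares a downloaded file's SHA-256 digest with a checksum published by the vendor before the file is run. The function names and parameters are placeholders I chose for illustration. They are not Codecov's actual tooling or values.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large downloads don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_published_checksum(path: Path, published_hex: str) -> bool:
    """Return True only if the file's digest equals the published one."""
    actual = sha256_of_file(path)
    # Normalize whitespace and case, then compare in constant time.
    return hmac.compare_digest(actual, published_hex.strip().lower())
```

A caller would refuse to run the script whenever `matches_published_checksum(...)` returns `False`. Keep in mind that the published checksum has to come from a channel the attacker can't also modify. Otherwise, both values could be changed together.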

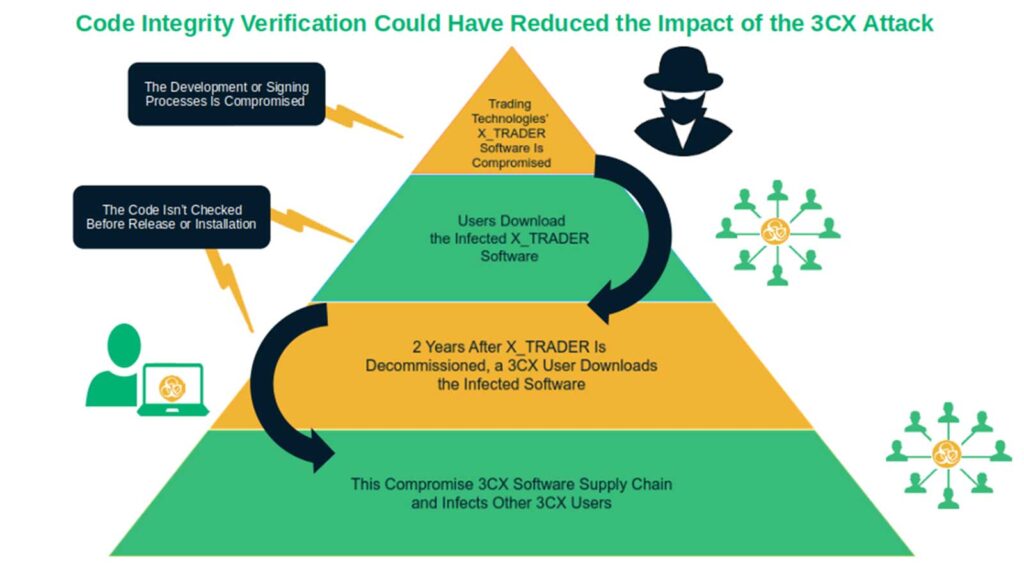

4. 3CX Breach: The Double Supply Chain Compromise (2023)

If you think that a single supply chain attack is bad, imagine the damage that a series of such attacks can do. Unfortunately, that’s precisely what victims of the 3CX Software breach experienced firsthand in 2023.

According to Mandiant, the Google cybersecurity subsidiary that ran the investigation, it was the first time the company saw a software supply chain attack lead directly to another software supply chain attack.

So, how did the bad guys pull it off? They injected malicious code into a legitimate (and digitally signed) version of the 3CX Desktop App, a chat, video, voice, and conference call software for Windows and macOS devices. (This software was used by an estimated 12+ million users worldwide.)

However, according to Mandiant, the infection started a year earlier. A 3CX employee had installed X_TRADER on their computer. This professional trading software package, which was developed by Trading Technologies, had been tampered with and distributed via a previous software supply chain compromise.

What a small world it is, huh? As 90s late-night infomercial hosts always loved to say: “But wait, there’s more!” To add insult to injury, the X_TRADER platform was discontinued in 2020, a full two years before the software was compromised. However, the decommissioned code was still available for download from the legitimate Trading Technologies website in 2022.

In addition, the unused package was still officially signed by “Trading Technologies International, Inc.” with a certificate that was set to expire in October 2022. These combined factors were a disaster waiting to happen.

So, both malicious packages were signed. Does it mean that code signing is inherently flawed? Nope, quite the opposite. Remember: Code signing protects your software from tampering, but only from the moment you apply your digital signature and timestamp to it. Furthermore, if you don’t have processes and policies in place to check the integrity of the code prior to signing it, you risk endorsing compromised code.

That reinforces the fundamental role of code integrity verification throughout the software development lifecycle. From the software’s cradle to its grave, any additions and changes to code must be verified, especially before allowing your software to be signed. This way, any tampering is caught before your product is released, downloaded, or installed by customers or other end users.

Now, before returning to the present day, let’s look at one last incident that happened recently.

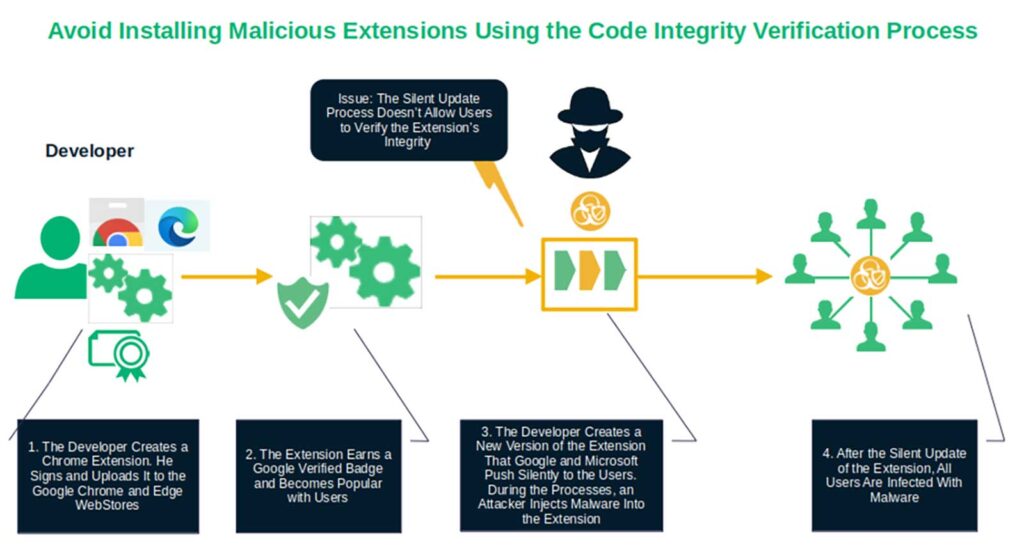

5. The Malicious Chrome Extensions With 2.3 Million Downloads (2025)

New year, new attack. In the first week of July 2025, Koi Security discovered and reported 18 seemingly separate malicious extensions available for download on the Chrome and Edge stores. What makes matters worse is that several had Google’s verified badges or featured placements and had garnered over 2.3 million downloads.

As it turns out, these extensions were all part of one centralized attack campaign called RedDirection, which used malware to monitor all browser tab activities. Based on Koi’s investigation, it appears that all of the infected extensions were legitimate and unaltered when the developers uploaded them initially. (Apparently, they remained so for years.) This is why Koi’s researchers believe attackers compromised the code of these extensions later and not when they were initially released.

That part was easy for the bad guys, given that Google’s auto updates provide users with the newest versions of the software without actively informing or requiring users to do anything. This is a major flaw, as it denies users a chance to perform a manual code integrity verification (e.g., compare the downloaded code’s hash with the original hash) before installing updates to the software.

Google did remove some of these infected extensions. But this is just a drop in the sea of malware-laden software. And anyway, would you really let an automated process install something on your device without being able to run any code integrity verifications?

4 Actions Linked to Code Integrity Verification That You Can Implement Right Now

Here we are, back in the present. It’s clear to see the invaluable role that code integrity verification plays in preventing a business’s worst nightmare from occurring. It could have helped prevent everything from costly data breaches to devastating malware infections.

But there is still something that companies directly affected by the events could have done to mitigate the impact. And guess what? You can implement these measures, too.

1. Secure Your Access Credentials

No matter how tempting it is, never, ever use default or hard-coded credentials and secrets.

- Follow password policy best practices.

- Replace all default usernames and passwords with unique, strong logins.

- Opt for multi-factor authentication (MFA).

- Go passwordless, if you can.
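
As one way to avoid hard-coding secrets, the following Python sketch reads a credential from an environment variable and fails loudly if it is missing. The names `load_secret`, `MissingSecretError`, and `BUILD_API_TOKEN` are hypothetical. A dedicated secrets manager would be a reasonable alternative to environment variables.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_secret(name: str) -> str:
    """Read a secret from the environment instead of hard-coding it in source."""
    value = os.environ.get(name, "")
    if not value:
        # Fail loudly rather than fall back to a default credential.
        raise MissingSecretError(f"environment variable {name} is not set")
    return value
```

A build script could then call `load_secret("BUILD_API_TOKEN")` at startup, so the token never appears in the repository or in a Docker image layer.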

2. Implement a Code Integrity Verification Policy

Provide comprehensive guidance on your code integrity verification policies and processes.

- Enforce a policy that requires code integrity verification via a combination of code signing and event logging.

- Ensure this policy doesn’t apply only to production and distribution, but to every single step of the development process.
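
Here is a rough idea of what such a policy could look like as a gate in a build pipeline. This Python sketch assumes a manifest that maps each build artifact to the SHA-256 digest recorded when it was reviewed, and it allows signing only when every artifact still matches. The function names, the logger name, and the manifest format are assumptions for illustration, not a standard.

```python
import hashlib
import logging
from pathlib import Path

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("integrity-gate")


def find_mismatches(build_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths whose SHA-256 digest differs from the manifest.

    `manifest` maps a relative file path to its expected hex digest.
    Missing files are reported as mismatches too.
    """
    mismatches = []
    for rel_path, expected in sorted(manifest.items()):
        target = build_dir / rel_path
        if not target.is_file():
            log.warning("missing artifact: %s", rel_path)
            mismatches.append(rel_path)
            continue
        actual = hashlib.sha256(target.read_bytes()).hexdigest()
        if actual != expected:
            log.warning("digest mismatch: %s", rel_path)
            mismatches.append(rel_path)
        else:
            log.info("verified: %s", rel_path)
    return mismatches


def ready_to_sign(build_dir: Path, manifest: dict[str, str]) -> bool:
    """Allow signing only when every listed artifact matches its recorded digest."""
    return not find_mismatches(build_dir, manifest)
```

Every check is logged, which covers the event logging half of the policy. Note that this sketch only checks files listed in the manifest, so a fuller version would also flag unexpected extra files in the build directory.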

3. Secure Your SDLC

Harden your software development lifecycle.

- Set up continuous monitoring and logging. It’ll allow you to keep an eye on who accesses what and which changes are made throughout the development process.

- Use static code analysis tools such as GitHub Advanced Security or OWASP Automated Software Security Toolkit (ASST). Many of these tools are free, and they help you identify coding mistakes and security vulnerabilities so that you can fix them immediately.

- Shift security left by embedding security throughout the SDLC and in your CI/CD practices.

4. Secure Your Organization With PKI

Take full advantage of public key infrastructure (PKI) to secure your public and private resources. This versatile framework enables organizations to authenticate users and devices, protect the confidentiality of data, and ensure its integrity.

The best part? It isn’t limited to code integrity verification. PKI has numerous applications for virtually every organization. In addition to signing your software and applications with a code signing certificate, PKI enables you to:

- Protect your websites and virtual private network (VPN) access with secure sockets layer/transport layer security (SSL/TLS) certificates.

- Secure your emails and authenticate devices with email signing certificates.

- Authenticate and protect the integrity of your sensitive documents using a document signing certificate.

- Authenticate and enable secure communications for the IoT and “smart” technologies across your IT ecosystem.

PKI is the ace up your sleeve for end-to-end security. Learn what it is, how it works, and where you can implement it.

Final Thoughts About 5 Real-World Software Nightmares That Could Have Been Prevented with Code Integrity Verification

Cybersecurity threats aren’t going away. The bad guys will always look for new tactics and weaknesses to exploit. Code integrity verification mechanisms like hashing, code signing, and SDLC continuous monitoring and logging are all actions that can help your company when and if the worst happens.

So, now that we’re back in the present, start embracing these code integrity verification best practices. They’ll help you build a more secure future for your software, organization, and customers.
